Video Communication

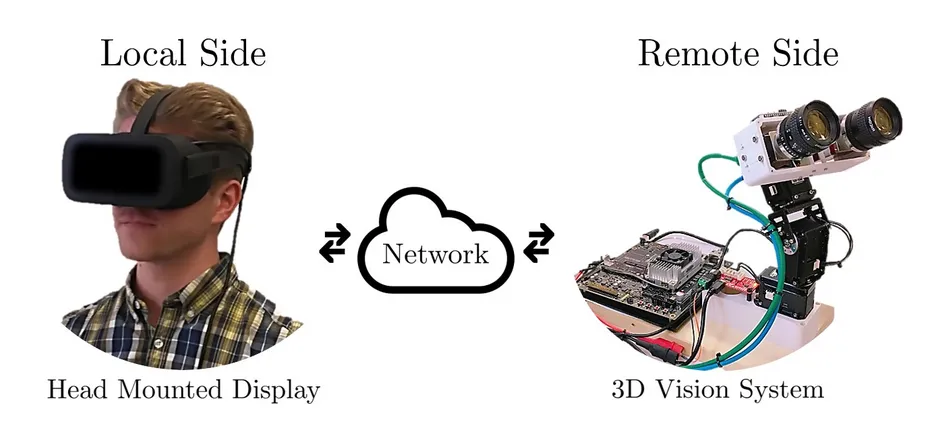

Our research activities focus on the next generation of video communication. The two main trends that will define this research field in the coming years are ultra-low latency and 360-degree stereo video streaming. Together, these technologies enable a multitude of novel use cases: for example, you can teleoperate a robot in a hazardous environment, perform telesurgery at previously unattainable levels of precision, or even take part in remote sports such as drone racing. In all scenarios, you can freely look around without suffering from motion sickness and benefit from perceiving depth in the scene you are operating in. To provide these technologies, we investigate novel coding schemes based on convolutional neural networks (CNNs), rate-distortion-complexity trade-offs in coding, delay compensation, head-motion prediction using neural networks, and more. This is a selection of our recent topics:

- Highly accurate latency measurement in video communication

- Analysis of delay contributors in video transmission

- Delay minimization in video transmission

- Evaluation of psychophysical bounds of the human visual system in terms of latency and frame rate

- Setting up frameworks for real-time 360-degree video streaming, including a Pan-Tilt-Roll Unit that replicates the movement of the user’s head

- Delay compensation for 360-degree real-time video transmission using visual buffers

- Head-motion prediction for 360-degree video consumption

- Analysis of the potential of compressive sampling compared to state-of-the-art video codecs

- CNN-based color image demosaicing (encoder-side enhancement)

- Decoder-side image and video quality enhancement with CNNs

- End-to-end CNN-based image and video compression schemes