Duckietown

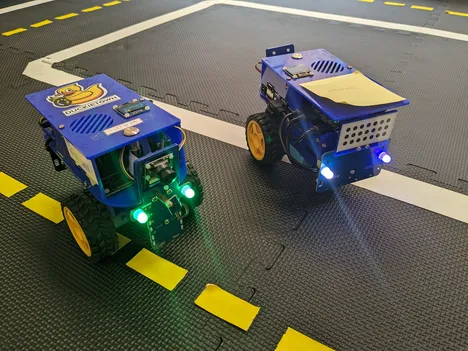

Duckietown is an environment for studying autonomous driving at a small scale. It consists of Duckiebots that can move autonomously in a modular environment. Duckiebots are small robots equipped with sensors and motors and controlled by an NVIDIA Jetson Nano.

We use this environment to visualize our research in the field of application-specific MPSoCs. As a first use case, we want to investigate and demonstrate the capabilities and behavior of our hardware-optimized learning classifier tables (LCTs). These are rule-based reinforcement learning (RL) engines developed in our IPF project.

Initially, we analyze the image processing pipeline of the Duckiebots and apply our LCTs as additional controllers in the Duckiebots' autonomous driving application. Based on a Duckiebot's current state, the LCTs should help it learn specific behaviors by adjusting relevant parameters of the processing pipeline. In a first step, student projects use a software version of the LCTs. In subsequent steps, the Duckiebots will be extended with an FPGA board, which enables an independent, hardware-based realization of the LCTs.

Involved Researchers

How to get involved?

As we use Duckietown both as a basis for our research and to demonstrate results in the currently very active application domain of autonomous driving, there are plenty of opportunities for students to contribute.

You can get involved in different ways, depending on your level of experience and study progress:

- Already during your bachelor's studies, you can put your knowledge from LinAlg (linear algebra), StoSi (stochastic signals), and Regelungstechnik (control engineering) into practice while improving your coding skills and learning about hardware architectures and autonomous driving. Get in touch with us to talk about open tasks and to get access to our physical setup.

- Towards the end of your bachelor's studies, you will write your first thesis. For that, have a look at the open BA topics below. The same applies to research internships (FPs) and master's theses (MAs).

- Depending on the currently planned steps, there might be tasks that cannot be assigned as a BA, FP, or MA. In such cases, we also offer paid working student positions. Open positions of that type are also listed below.

Thesis Offers

Interested in an internship or a thesis? Please send us an email.

The stated type of work is only a guideline and can be changed if needed.

From time to time, there might be work that has not been announced yet. Feel free to ask!

Assigned Theses

Duckietown - DuckieVisualizer Extension and System Maintenance

Description

At LIS, we leverage the Duckietown hardware and software ecosystem to experiment with our reinforcement learning (RL) agents, known as learning classifier tables (LCTs), as part of the Duckiebot control system. More information on Duckietown can be found at https://www.duckietown.org/.

In previous work, we developed a tool called DuckieVisualizer to monitor our Duckiebots, evaluate their driving performance, and visualize and interact with the actively learning RL agents.

This student assistant position will involve extending the tool and its interfaces on the robot side with further features, e.g., more complex learning algorithms or driving statistics. The underlying camera processing program should also be ported from MATLAB to a faster programming language to enable real-time robot tracking. Furthermore, more robust Duckiebot identification mechanisms should be considered.

Besides these extensions to the DuckieVisualizer, the student will also take on general system maintenance tasks. These may concern both the Duckiebots' hardware and their software stack, for example, merging different sub-projects and making quality-of-life improvements to the Docker-based build process. Another task will be to help new students set up their development environment and to assist them in their first steps. Finally, the student can get involved in expanding our track and adding new components, e.g., intersections or duckie pedestrian crossings.

Requirements

- Understanding of networking and computer vision

- Experience with Python, ROS, and GUI development

- Familiarity with Docker and Git

- Structured way of working and strong problem-solving skills

- Interest in autonomous driving and robotics

Contact

michael.meidinger@tum.de

Supervisor:

Duckietown - Improved Distance Measurement

Description

At LIS, we leverage the Duckietown hardware and software ecosystem to experiment with our reinforcement learning (RL) agents, known as learning classifier tables (LCTs), as part of the Duckiebot control system. More information on Duckietown can be found at https://www.duckietown.org/.

We use a Duckiebot's Time-of-Flight (ToF) sensor to measure the distance to objects in front of the robot. This allows it to stop before crashing into obstacles. The distance measurement is also used in our platooning mechanism. When another Duckiebot is detected via its rear dot pattern, the robot can adjust its speed to follow the other Duckiebot at a given distance.
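To illustrate the idea of following a leading robot at a given distance, here is a minimal sketch of a proportional distance keeper. It is not the controller used on our Duckiebots: the class name `PlatoonFollower`, the target gap, gain, speed limit, and the median filter over recent ToF readings are hypothetical choices made for illustration.

```python
from collections import deque
from statistics import median


class PlatoonFollower:
    """Hypothetical proportional follower that keeps a target gap to a leading robot."""

    def __init__(self, target_gap_m=0.25, gain=1.5, max_speed=0.4, window=5):
        self.target_gap_m = target_gap_m      # desired distance to the leader (assumed)
        self.gain = gain                      # speed command per meter of gap error (assumed)
        self.max_speed = max_speed            # upper speed limit (assumed)
        self.readings = deque(maxlen=window)  # most recent ToF distances

    def update(self, tof_distance_m: float) -> float:
        """Add one ToF reading and return a forward speed command."""
        self.readings.append(tof_distance_m)
        gap = median(self.readings)  # the median damps isolated outlier readings
        speed = self.gain * (gap - self.target_gap_m)
        # Too close -> stop (no reversing); too far -> cap at max_speed.
        return min(max(speed, 0.0), self.max_speed)
```

With a window of five readings, a single spurious long-range reading barely changes the filtered gap, so the follower does not suddenly accelerate.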

Unfortunately, the measurement region of the integrated ToF sensor is very narrow. It only detects objects reliably in a cone of about 5 degrees in front of the robot. Objects outside this cone, either too far to the side or too high/low, cannot reflect the emitted laser beam back to the sensor's collector, leading to crashes. The distance measurement is also fairly noisy: its accuracy decreases with larger distances, angular offsets from the sensor, and uneven reflection surfaces. As a result, the distance to the leading Duckiebot is often measured incorrectly in platooning mode, causing the robot to react with unexpected maneuvers and to lose track of the leading robot.
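To put the narrow cone into numbers, the following sketch assumes that the stated 5 degrees is the full opening angle (so the half-angle is 2.5 degrees) and that the cone is centered on the sensor axis; both are assumptions, and the function names are hypothetical.

```python
import math


def cone_radius_m(forward_m: float, full_angle_deg: float = 5.0) -> float:
    """Radius of the sensing cone at a given forward distance."""
    return forward_m * math.tan(math.radians(full_angle_deg / 2))


def in_cone(forward_m: float, lateral_m: float, vertical_m: float = 0.0,
            full_angle_deg: float = 5.0) -> bool:
    """True if a point lies within the cone around the sensor axis."""
    # Angle between the sensor axis and the line from the sensor to the point.
    off_axis = math.atan2(math.hypot(lateral_m, vertical_m), forward_m)
    return off_axis <= math.radians(full_angle_deg / 2)


print(round(cone_radius_m(0.3) * 100, 1))  # cone radius at 30 cm, in cm; prints 1.3
```

Under these assumptions, an object 30 cm ahead must lie within roughly 1.3 cm of the sensor axis to be detected, which illustrates why off-center or too high/low objects are missed.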

In this student assistant project, the student will investigate how to resolve these issues. After analyzing the current setup, they should consider different sensors and mounting positions on the robot's front. Adding a new sensor to the Duckiebot system will require a suitable driver and some hardware adaptations. Finally, they will integrate the improved distance measurement setup into our Python/ROS-based autonomous driving pipeline, evaluate it in terms of measurement region and accuracy, and compare the new setup to the baseline.
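For comparing a new sensor setup against the baseline, per-angle metrics could be computed as in the minimal sketch below. The input format (angular offset, ground-truth distance, measured distance or `None` if nothing was detected) and the 2 cm tolerance are assumptions, not a fixed evaluation protocol.

```python
from collections import defaultdict
from statistics import mean


def evaluate(samples, tolerance_m=0.02):
    """samples: iterable of (angle_deg, true_m, measured_m or None).

    Returns, per angular offset, the detection rate, the mean absolute error of
    detected samples, and the share of samples measured within the tolerance.
    """
    groups = defaultdict(list)
    for angle, true_m, measured_m in samples:
        groups[angle].append((true_m, measured_m))
    report = {}
    for angle, rows in sorted(groups.items()):
        hits = [(t, m) for t, m in rows if m is not None]
        report[angle] = {
            "detection_rate": len(hits) / len(rows),
            "mae_m": mean(abs(m - t) for t, m in hits) if hits else None,
            "within_tol": sum(abs(m - t) <= tolerance_m for t, m in hits) / len(rows),
        }
    return report
```

The detection rate per angle characterizes the measurement region, while the error metrics capture accuracy; running the same recording procedure for both setups makes the numbers comparable.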

These modifications should allow us to avoid crashes more reliably and enhance our platooning mode, which will be helpful for further development, especially when moving to more difficult-to-navigate environments, e.g., tracks with intersections and sharp turns.

Requirements

- Basic understanding of sensor technology and data transmission protocols

- Experience or motivation to familiarize yourself with Python and ROS

- Structured way of working and strong problem-solving skills

- Interest in autonomous driving and robotics

Contact

michael.meidinger@tum.de

Supervisor:

Duckietown - Improved RL-Based Speed Control

Description

At LIS, we leverage the Duckietown hardware and software ecosystem to experiment with our reinforcement learning (RL) agents, known as learning classifier tables (LCTs), as part of the Duckiebot control system. More information on Duckietown can be found at https://www.duckietown.org/.

In previous work, the default deterministic controller for the robot's speed was replaced by an RL agent. It uses an LCT with the current speed as the system state and actions that cause relative speed changes. To enable platooning with this agent, a second version adds the distance to a Duckiebot driving ahead as a second entry in the state vector. With this limited rule set and a modified SARSA/Q-learning method for Q-value updates, the agent quickly learns to drive the bot at a given target speed.
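The basic idea of a table-based speed agent can be sketched with plain tabular Q-learning. This is not our LCT implementation or its modified SARSA/Q-learning update: the speed bins, the relative speed changes used as actions, the reward (negative deviation from the target speed), the hyperparameters, and the toy environment in `train` are all hypothetical.

```python
import random

SPEED_BINS = [0.0, 0.1, 0.2, 0.3, 0.4, 0.5]  # discretized speeds (hypothetical units)
ACTIONS = [-0.1, 0.0, 0.1]                   # relative speed changes (hypothetical)


def to_bin(speed: float) -> int:
    """Index of the speed bin closest to the given speed."""
    return min(range(len(SPEED_BINS)), key=lambda i: abs(SPEED_BINS[i] - speed))


class SpeedAgent:
    """Tabular Q-learning over (speed bin, action); a simplified stand-in for an LCT."""

    def __init__(self, alpha=0.2, gamma=0.8, epsilon=0.1, seed=0):
        self.q = [[0.0] * len(ACTIONS) for _ in SPEED_BINS]
        self.alpha, self.gamma, self.epsilon = alpha, gamma, epsilon
        self.rng = random.Random(seed)

    def act(self, state: int, explore: bool = True) -> int:
        """Epsilon-greedy action selection; ties go to the lowest action index."""
        if explore and self.rng.random() < self.epsilon:
            return self.rng.randrange(len(ACTIONS))
        row = self.q[state]
        return row.index(max(row))

    def update(self, state: int, action: int, reward: float, next_state: int) -> None:
        """Q-learning update toward r + gamma * max_a' Q(s', a')."""
        target = reward + self.gamma * max(self.q[next_state])
        self.q[state][action] += self.alpha * (target - self.q[state][action])


def train(target_speed=0.3, episodes=200, steps=20) -> SpeedAgent:
    """Train on a toy environment where each action shifts the speed by one bin."""
    agent = SpeedAgent()
    for _ in range(episodes):
        speed = agent.rng.choice(SPEED_BINS)
        for _ in range(steps):
            state = to_bin(speed)
            action = agent.act(state)
            # Apply the relative change and snap to the nearest bin (this also clamps).
            speed = SPEED_BINS[to_bin(speed + ACTIONS[action])]
            reward = -abs(speed - target_speed)  # penalize deviation from the target
            agent.update(state, action, reward, to_bin(speed))
    return agent
```

After `agent = train()`, the greedy action `agent.act(to_bin(0.1), explore=False)` is the speed increase, since the zero-initialized table is optimistic with respect to the non-positive rewards and quickly learns which changes move the speed toward the target.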

However, the RL-based speed controller does not consider the track's properties. A given target speed might be too high to take turns properly, especially when the agent is eventually combined with the separately developed steering agent. On the other hand, consistently letting the robot drive slowly would be a waste of time on a mostly straight track.

To resolve this problem, this thesis should introduce the curvature of the track into the state observation. To detect curves in the camera images, the student will extend the image processing pipeline beyond what the steering mechanism currently uses to handle turns. The student will expand the rule set, adapt the reward function, and investigate the effects on learning performance. A more extensive rule set will likely require a more advanced Q-value update method. Additional improvements might concern the accuracy of the wheel encoders' speed measurement. The enhanced speed controller will be evaluated in terms of learning speed, driving performance (deviation from the target speed in different scenarios), and resource utilization.
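One simple way to turn lane geometry into a curvature value for the state is the curvature of the circle through three points (Menger curvature). The sketch assumes that three points along the lane center are already available in ground-plane coordinates in meters, with x pointing forward and y to the left; the pipeline does not necessarily provide this, and the function names and the 0.3 1/m threshold for the coarse curve state are hypothetical.

```python
import math


def menger_curvature(p1, p2, p3) -> float:
    """Signed curvature (1/m) of the circle through three (x, y) points.

    Positive for counter-clockwise (left) bends, negative for clockwise (right)
    bends, and 0.0 for collinear or coincident points.
    """
    (x1, y1), (x2, y2), (x3, y3) = p1, p2, p3
    # Cross product = twice the signed area of the triangle p1-p2-p3.
    cross = (x2 - x1) * (y3 - y1) - (y2 - y1) * (x3 - x1)
    sides = math.dist(p1, p2) * math.dist(p2, p3) * math.dist(p1, p3)
    if sides == 0.0:
        return 0.0
    # kappa = 4 * area / (a * b * c) = 2 * cross / (a * b * c)
    return 2.0 * cross / sides


def curve_state(kappa: float, threshold: float = 0.3) -> int:
    """Coarse state entry: -1 right bend, 0 roughly straight, +1 left bend."""
    if kappa > threshold:
        return 1
    if kappa < -threshold:
        return -1
    return 0
```

Three points on a circle of radius 2 m yield a curvature of 0.5 1/m; a discretized value like `curve_state(...)` could then serve as an additional entry in the speed agent's state vector next to the current speed.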

With these extensions to the speed controller, those to the steering controller, and the object recognition algorithm developed in further student work, we will set the foundation to deploy our RL-based Duckiebots in more difficult-to-navigate environments, e.g., tracks with intersections and sharp turns.

Contact

michael.meidinger@tum.de

Supervisor:

Duckietown - Real-Time Object Recognition for Autonomous Driving

Description

At LIS, we leverage the Duckietown hardware and software ecosystem to experiment with our reinforcement learning (RL) agents, known as learning classifier tables (LCTs), as part of the Duckiebot control system. More information on Duckietown can be found at https://www.duckietown.org/.

In previous work, an algorithm to detect obstacles in a Duckiebot's path was developed. It uses the robot's Time-of-Flight (ToF) sensor to detect general obstacles in front of the robot. It can also specifically detect duckies in the camera image, which is mainly used for lane detection, by creating a color-matching mask and keeping bounding rectangles within a certain size range and position on the track. If the robot detects an obstacle in its path, it slows down; if the obstacle is too close, it stops and waits for it to disappear.
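The color-mask step can be sketched in pure Python as follows: threshold each pixel in HSV space for yellow, group matching pixels into 4-connected regions, and keep the bounding rectangles of regions within a size range. The HSV thresholds, area limits, and the list-of-tuples image format are assumptions for illustration; a real implementation would typically use an image-processing library such as OpenCV, and the position filter on the track is omitted here.

```python
import colorsys
from collections import deque


def yellow_mask(image, hue=(0.10, 0.20), min_sat=0.5, min_val=0.4):
    """image: list of rows of (r, g, b) tuples in 0..255. Returns a boolean mask."""
    mask = []
    for row in image:
        out = []
        for r, g, b in row:
            h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
            out.append(hue[0] <= h <= hue[1] and s >= min_sat and v >= min_val)
        mask.append(out)
    return mask


def bounding_boxes(mask, min_area=2, max_area=400):
    """(x, y, w, h) of 4-connected regions whose pixel count is within the range."""
    height, width = len(mask), len(mask[0])
    seen = [[False] * width for _ in range(height)]
    boxes = []
    for y0 in range(height):
        for x0 in range(width):
            if not mask[y0][x0] or seen[y0][x0]:
                continue
            # Breadth-first flood fill collecting all pixels of this region.
            queue = deque([(x0, y0)])
            seen[y0][x0] = True
            xs, ys = [], []
            while queue:
                x, y = queue.popleft()
                xs.append(x)
                ys.append(y)
                for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                    if 0 <= nx < width and 0 <= ny < height and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            if min_area <= len(xs) <= max_area:
                boxes.append((min(xs), min(ys), max(xs) - min(xs) + 1, max(ys) - min(ys) + 1))
    return boxes
```

Because the filter relies only on color and size, anything similarly yellow and similarly sized, such as a segment of the centerline, passes as well, which is one source of the confusion described below.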

While this algorithm works well for large obstacles directly in front of the ToF sensor and for duckies on straight tracks, it struggles to detect obstacles in other scenarios. The ToF sensor only covers a narrow measurement region and misses objects that are off-center or too high/low. Its measurements are also not very reliable, especially at larger distances. The camera-based recognition sometimes confuses duckies with the track's equally yellow centerline. It can also fail to detect them on curved tracks due to blind spots, which are caused by the dynamic region of interest rather than by an actual blind spot of the camera.

We also want to include other objects besides duckies in our recognition algorithm, e.g., stop lines or traffic signs at intersections. Since the camera approach is tuned to the size and color of duckies, extending it to different objects would require manual effort. Therefore, in this Bachelor's thesis, we want to overhaul our object recognition so that it detects various objects more reliably in different scenarios. A promising approach could be the YOLO (You Only Look Once) algorithm, a single-pass real-time object detection algorithm based on a convolutional neural network. There is also some related work on this topic in the Duckietown community.
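Single-pass detectors such as YOLO typically predict several overlapping boxes per object, which are then filtered by non-maximum suppression (NMS). The sketch below shows greedy NMS based on intersection over union (IoU); boxes are given as (x, y, w, h), and the 0.5 IoU threshold is a common but arbitrary choice. In practice, NMS is usually applied per class and is often already built into the detection framework.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x, y, w, h)."""
    ax2, ay2 = a[0] + a[2], a[1] + a[3]
    bx2, by2 = b[0] + b[2], b[1] + b[3]
    iw = max(0.0, min(ax2, bx2) - max(a[0], b[0]))
    ih = max(0.0, min(ay2, by2) - max(a[1], b[1]))
    inter = iw * ih
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: keep the highest-scoring box, drop boxes overlapping it too much, repeat.

    Returns the indices of the kept boxes, ordered by descending score.
    """
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep
```

The same IoU measure is commonly used when evaluating detection accuracy, where a prediction counts as correct if its IoU with a ground-truth box exceeds a chosen threshold.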

The student will start with a continued analysis of the problems of the previous method and literature research regarding viable object detection algorithms. Afterward, they will implement and integrate the selected algorithm into the existing framework. Depending on the approach, some training will be necessary. Furthermore, they will evaluate the detection pipeline in terms of accuracy in different scenarios, latency, and resource utilization. Once a reliable detection system is established, our system can be extended to more complex behavior, such as circumnavigating duckies or responding to traffic signs at intersections.

Requirements

- Experience with Python and, ideally, ROS

- Familiarity with neural networks and computer vision

- Structured way of working and strong problem-solving skills

- Interest in autonomous driving

Contact

michael.meidinger@tum.de

Supervisor:

Duckietown - Improved RL-based Vehicle Steering

Description

At LIS, we leverage the Duckietown hardware and software ecosystem to experiment with our reinforcement learning (RL) agents, known as learning classifier tables (LCTs), as part of the Duckiebots' control system (https://www.ce.cit.tum.de/lis/forschung/aktuelle-projekte/duckietown-lab/).

More information on Duckietown can be found at https://www.duckietown.org/.

In previous work, an LCT agent for steering Duckiebots has been developed that uses only the angular heading error as the system state. In this Bachelor's thesis, the vehicle steering agent should be improved and its functionality extended.

Starting from the existing Python/ROS implementation of the RL agent and our image processing pipeline, multiple system parts should be enhanced. On the environment side, the detection of the lateral offset from the lane center should be made more reliable. This will require an analysis of the current problems and some adaptations to the pipeline, possibly including hardware changes.

With more reliable lane offset values, the agent's state observation can include the lateral offset as well, allowing us to move further away from the default PID control towards a purely RL-based steering approach. This will involve modifications to the rule population, the reward function, and potentially the learning method. Different configurations are to be implemented and evaluated in terms of their resulting performance and efficiency.
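A combined state observation and a possible reward for the steering agent could look like the following sketch. The bin edges, units, and reward weights are hypothetical placeholders, not tuned values; they only illustrate how heading error and lateral offset might jointly define the rule conditions and the reward.

```python
import bisect

# Hypothetical bin edges: heading error in rad, lateral offset in m.
HEADING_EDGES = [-0.3, -0.1, 0.1, 0.3]
OFFSET_EDGES = [-0.05, -0.02, 0.02, 0.05]


def steering_state(heading_err_rad: float, lateral_offset_m: float) -> tuple:
    """Map continuous errors to a pair of bin indices (0..4 each)."""
    return (bisect.bisect(HEADING_EDGES, heading_err_rad),
            bisect.bisect(OFFSET_EDGES, lateral_offset_m))


def steering_reward(heading_err_rad: float, lateral_offset_m: float,
                    w_heading: float = 1.0, w_offset: float = 5.0) -> float:
    """Closer to 0 when the robot is aligned with and centered in its lane."""
    return -(w_heading * abs(heading_err_rad) + w_offset * abs(lateral_offset_m))
```

With five bins per dimension, the state space grows from 5 to 25 combinations, which hints at why a larger rule population and possibly a different learning method may be needed.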

The thesis aims to shift the vehicle steering entirely to the RL agent, ideally reducing the effort for manual parameter tuning while being comparable in driving performance and computation effort.

Requirements

- Experience with Python and, ideally, ROS

- Basic knowledge of reinforcement learning

- Structured way of working and problem-solving skills

Contact

michael.meidinger@tum.de

Supervisor:

Completed Theses

Kontakt

michael.meidinger@tum.de

Betreuer:

Betreuer:

Kontakt

michael.meidinger@tum.de

Betreuer:

Kontakt

flo.maurer@tum.de

Betreuer:

Kontakt

flo.maurer@tum.de

michael.meidinger@tum.de

Betreuer:

Kontakt

flo.maurer@tum.de

michael.meidinger@tum.de

Betreuer:

Kontakt

flo.maurer@tum.de

michael.meidinger@tum.de

Betreuer:

Kontakt

flo.maurer@tum.de

Betreuer:

Kontakt

flo.maurer@tum.de

Betreuer:

Kontakt

flo.maurer@tum.de

Betreuer:

Kontakt

flo.maurer@tum.de

Betreuer:

Kontakt

flo.maurer@tum.de

Betreuer:

Kontakt

flo.maurer@tum.de