- RTL Simulation of High Performance Dynamic Reconfiguration: A Video Processing Case Study. Reconfigurable Architectures Workshop (RAW), 2013

- Egomotion compensation and moving objects detection algorithm on GPU. International Conference on Parallel Computing (ParCo), 2011

- Dynamic Partial Reconfiguration of Xilinx FPGAs Lets Systems Adapt on the Fly. Xcell Journal, 2010

- AutoVision - Reconfigurable Hardware Acceleration for Video-Based Driver Assistance. In: Platzner, Teich, Wehn (Editors): Dynamically Reconfigurable Systems. Springer Verlag, 2010

- FPGA-based Real-time Moving Object Detection for Walking Robots. 8th IEEE International Workshop on Safety, Security and Rescue Robotics (SSRR), 2010

- Lessons Learned from last 4 Years of Reconfigurable Computing. Dagstuhl Seminar Proceedings 10281 on Dynamically Reconfigurable Architectures, 2010

- Towards rapid dynamic partial reconfiguration in video-based driver assistance systems. 6th International Symposium on Applied Reconfigurable Computing (ARC), 2010

- AutoVision – a reconfigurable system on chip for future situation adaptive video based driver assistance systems. EDAA/ACM PhD forum at the DATE Conference, 2010

- In-flight verification of CCSDS based on-board real-time video compression. 61st International Astronautical Congress (IAC), 2010

- AutoVision – a reconfigurable system on chip for future situation adaptive video based driver assistance systems. SIGDA/EDAA PhD forum (DATE), 2010

- Stereo Vision Based Vehicle Detection. VISIGRAPP 2010: International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications, 2010

- High performance FPGA based optical flow calculation using the census transformation. The Intelligent Vehicles Symposium (IV), 2009

- IP-based Driver Assistance Camera Systems - Architecture and Network Design. IEEE International Conference on Networking, Sensing and Control, 2009

- Toward Contextual Forensic Retrieval for Visual Surveillance. 10th International Workshop on Image Analysis for Multimedia Interactive Services (WIAMIS), 2009

- Segmentation through Edge-Linking - Segmentation for Video-based Driver Assistance Systems. International Conference on Imaging Theory and Applications (IMAGAPP), 2009

- A comparison of embedded reconfigurable video-processing architectures. International Conference on Field Programmable Logic and Applications (FPL), 2008

- A multi-platform controller allowing for maximum dynamic partial reconfiguration throughput. International Conference on Field Programmable Logic and Applications (FPL), 2008

- Hardware/software architecture of an algorithm for vision-based real-time vehicle detection in dark environments. Design, Automation & Test in Europe (DATE), IEEE Press, 2008

- Reconfigurable HW/SW Architecture of a Real-Time Driver Assistance System. Reconfigurable Computing: Architecture, Tools and Applications, 4th International Workshop, ARC 2008 (Lecture Notes in Computer Science 4943), Springer, 2008, pp. 149-159

- Reconfigurable Processing Units vs. Reconfigurable Interconnects. Dagstuhl Seminar on Dynamically Reconfigurable Architectures, 2007

- AutoVision – A Run-time Reconfigurable MPSoC Architecture for Future Driver Assistance Systems. it - Information Technology Journal (3), 2007

AutoVision – A Run-time Reconfigurable MPSoC Architecture for Future Driver Assistance Systems

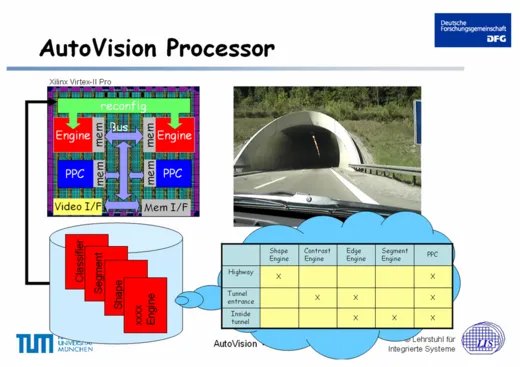

The AutoVision architecture is a new Multi-Processor System-on-Chip (MPSoC) architecture for video-based driver assistance systems that uses run-time reconfigurable hardware accelerator engines for video processing. Different driving conditions (highway, city, sunlight, rain, tunnel entrance) require different video processing algorithms. These algorithms in turn require different hardware accelerator engines, which are loaded into the AutoVision chip at run time, triggered by changes in the driving conditions. We investigate how to use dynamic partial reconfiguration to load and operate the right hardware accelerator engines in time, while removing unused engines in order to save precious chip area.

Introduction

In future automotive systems, video-based driver assistance will improve safety. Video processing for driver assistance requires real-time implementation of complex algorithms. On the hardware available in automotive environments, a pure software implementation cannot deliver the required real-time performance, so hardware acceleration is necessary. Dedicated hardware circuits (ASICs) can provide the required real-time processing, but they lack the necessary flexibility: video algorithms for driver assistance are not standardized, and may never be, and algorithmic research is expected to continue for years to come. Flexible, programmable hardware acceleration is therefore needed. Specific driving conditions, e.g. highway, countryside, urban traffic, or tunnels, require specifically optimized algorithms. Reconfigurable hardware offers high potential for real-time video processing and can be adapted to various driving conditions and to future algorithms.

Today’s driver assistance systems offer features such as adaptive cruise control and lane departure warning, using both video cameras and radar sensors. On highways and two-way primary roads, a safe distance to the vehicle ahead can be kept automatically over a broad speed range. However, for complex driving situations and environments, e.g. urban traffic, there are no established and reliable algorithms yet. This is a topic for future research.

Algorithms for video processing can be grouped into high-level application code and low-level pixel operations. High-level application code requires a high degree of flexibility and is therefore well suited to a standard processor. Pixel manipulation, on the other hand, applies the same operation to many pixels and is therefore a good candidate for hardware acceleration.
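To give a feel for why pixel-level operations dominate the workload, the following minimal sketch estimates how many operations per second a single per-pixel kernel needs on a video stream. The resolution (640×480), frame rate (25 frames/s), and 3×3 filter are assumptions chosen for illustration, not AutoVision parameters.

```python
def pixel_ops_per_second(width: int, height: int, fps: float, ops_per_pixel: int) -> float:
    """Operations per second needed to apply one per-pixel kernel to a video stream."""
    return width * height * fps * ops_per_pixel


# Hypothetical example: a 640x480 camera at 25 frames/s and a 3x3 filter,
# i.e. 9 multiply-accumulate (MAC) operations per pixel.
rate = pixel_ops_per_second(640, 480, 25, 9)
print(f"{rate / 1e6:.2f} million MAC/s")  # prints "69.12 million MAC/s"
```

Each additional pixel-level stage multiplies this load, while the high-level code that consumes the results runs only a few times per frame.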

Hardware reconfiguration

In general, a large number of different coprocessors could be implemented in parallel on a System-on-Chip (SoC). However, this is not resource-efficient, because, depending on the application, only a subset of all coprocessors is active at any one time. With hardware reconfiguration, hardware resources can be used more efficiently: coprocessor configurations are loaded into an FPGA whenever the application needs them.
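The area argument above can be made concrete with a small sketch, assuming hypothetical coprocessor sizes (in FPGA slices) and hypothetical usage phases. A static design must hold every coprocessor at once, whereas a reconfigurable region only has to hold the largest set of coprocessors active at the same time. This is a simplification that ignores reconfiguration time and the slot-placement constraints of real partial reconfiguration.

```python
from typing import Dict, List, Set


def static_area(areas: Dict[str, int]) -> int:
    """Area if every coprocessor is implemented side by side on the chip."""
    return sum(areas.values())


def reconfigurable_area(areas: Dict[str, int], phases: List[Set[str]]) -> int:
    """Area if only the coprocessors active in the current phase are loaded.

    The region must fit the largest set of simultaneously active coprocessors;
    reconfiguration overhead is ignored in this simplified model.
    """
    return max(sum(areas[name] for name in phase) for phase in phases)


# Hypothetical coprocessor sizes (slices) and usage phases, for illustration only.
areas = {"A": 3000, "B": 2000, "C": 2500}
phases = [{"A"}, {"B"}, {"C"}, {"B", "C"}]
print(static_area(areas), reconfigurable_area(areas, phases))  # prints "7500 4500"
```

The saving grows with the number of coprocessors that are never needed at the same time.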

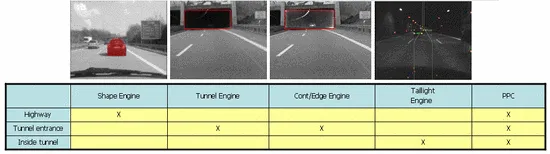

Consider the following driving scenario: a car is driving on a highway in daylight and then enters a tunnel. On the highway, other road users, e.g. cars, trucks, bikes, motorbikes, pedestrians, and animals, have to be recognized and distinguished. We have started to use MPEG-7-based Region Shape Descriptors for this task. The pixel-level processing can be accelerated by a coprocessor called ShapeEngine. If the road leads into a tunnel, the tunnel entrance is marked as a Region of Interest (ROI).
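The MPEG-7 Region Shape Descriptor is based on the Angular Radial Transform (ART). The sketch below computes unquantized ART coefficient magnitudes of a binary region mask, with basis functions R_n(ρ)·e^{jmθ}/2π, where R_0 = 1 and R_n = 2cos(πnρ) for n > 0. It is a simplified illustration: it omits the standard's sampling and quantization details, and it is not the ShapeEngine implementation. The function names are hypothetical.

```python
import cmath
import math
from typing import Dict, List, Tuple

Descriptor = Dict[Tuple[int, int], float]


def art_descriptor(mask: List[List[int]], n_radial: int = 3, n_angular: int = 12) -> Descriptor:
    """Normalized ART coefficient magnitudes of a non-empty binary region mask.

    The region is centered at its centroid and scaled so that its farthest
    pixel lies on the unit circle; magnitudes are divided by |F_00|.
    """
    pixels = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    cx = sum(x for x, _ in pixels) / len(pixels)
    cy = sum(y for _, y in pixels) / len(pixels)
    # Polar coordinates of every region pixel relative to the centroid
    polar = [(math.hypot(x - cx, y - cy), math.atan2(y - cy, x - cx)) for x, y in pixels]
    radius = max(r for r, _ in polar) or 1.0  # single-pixel region: avoid division by 0

    coeffs: Descriptor = {}
    for n in range(n_radial):
        for m in range(n_angular):
            acc = 0j
            for r, theta in polar:
                rho = r / radius
                radial = 1.0 if n == 0 else 2.0 * math.cos(math.pi * n * rho)
                # Conjugate of the basis function: R_n(rho) * exp(-j m theta) / (2 pi)
                acc += radial * cmath.exp(-1j * m * theta) / (2.0 * math.pi)
            coeffs[(n, m)] = abs(acc)
    f00 = coeffs.pop((0, 0))  # equals len(pixels) / (2 pi), always > 0
    return {key: value / f00 for key, value in coeffs.items()}


def descriptor_distance(a: Descriptor, b: Descriptor) -> float:
    """L1 distance between two descriptors (smaller means more similar shapes)."""
    return sum(abs(a[k] - b[k]) for k in a)
```

Because only magnitudes are kept, a rotation of the region only changes the phase of each coefficient, so, for example, an L-shaped mask and the same mask rotated by 90 degrees yield a `descriptor_distance` close to zero.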

The tunnel entrance itself is rather dark, while its surroundings are bright. The most important area is the road inside the tunnel, behind the entrance, where edge detection can be used to detect obstacles. While vertical edges and vertical luminance structures mainly belong to the road and lane markers, horizontal edges mainly belong to obstacles, e.g. vehicles in the lane. The related pixel-level processing can be accelerated by a coprocessor called EdgeEngine.
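The distinction between horizontal and vertical edges can be illustrated with a Sobel operator, which separates the horizontal and vertical components of the luminance gradient. This minimal sketch assumes the tunnel ROI has already been cropped into a grayscale image. The Sobel kernels, the threshold, and the function name are our own choices for illustration and not necessarily what the EdgeEngine implements.

```python
from typing import List, Tuple

SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]  # strong response at vertical edges
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]  # strong response at horizontal edges


def classify_edges(img: List[List[int]], threshold: int) -> Tuple[int, int]:
    """Count (horizontal, vertical) edge pixels in a grayscale ROI.

    A pixel is an edge pixel if |gx| + |gy| exceeds `threshold`; it counts as a
    horizontal edge if the vertical gradient gy dominates. Border pixels are skipped.
    """
    h, w = len(img), len(img[0])
    horizontal = vertical = 0
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = gy = 0
            for dy in range(3):
                for dx in range(3):
                    p = img[y + dy - 1][x + dx - 1]
                    gx += SOBEL_X[dy][dx] * p
                    gy += SOBEL_Y[dy][dx] * p
            if abs(gx) + abs(gy) <= threshold:
                continue  # flat area, no edge
            if abs(gy) > abs(gx):
                horizontal += 1  # e.g. the lower outline of a vehicle
            else:
                vertical += 1  # e.g. lane markers converging in perspective
    return horizontal, vertical
```

A high count of horizontal edge pixels inside the ROI would then hint at an obstacle on the road behind the tunnel entrance.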

Inside the tunnel, due to the low luminance level, only the lights of other vehicles can be detected. Headlights, rear lights, and fixed tunnel lights have to be distinguished. This is done by luminance segmentation and by measuring the position and movement of the bright areas. The pixel-level processing can be accelerated by a coprocessor called SegmentEngine.
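Luminance segmentation with position and movement measurement can be sketched as thresholding followed by connected-component labeling, plus a naive nearest-centroid match between consecutive frames. This is a minimal illustration under assumed parameters (a fixed luminance threshold, 4-connectivity); the function names are hypothetical, and the actual classification into headlights, rear lights, and tunnel lights is not shown.

```python
from collections import deque
from typing import List, Tuple

Blob = Tuple[float, float, int]  # (centroid_x, centroid_y, area in pixels)


def segment_lights(img: List[List[int]], threshold: int) -> List[Blob]:
    """Label bright 4-connected regions and return their centroids and areas."""
    h, w = len(img), len(img[0])
    seen = [[False] * w for _ in range(h)]
    blobs: List[Blob] = []
    for y0 in range(h):
        for x0 in range(w):
            if seen[y0][x0] or img[y0][x0] < threshold:
                continue
            # Breadth-first flood fill of one bright region
            queue = deque([(x0, y0)])
            seen[y0][x0] = True
            sx = sy = n = 0
            while queue:
                x, y = queue.popleft()
                sx += x
                sy += y
                n += 1
                for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                    if 0 <= nx < w and 0 <= ny < h and not seen[ny][nx] and img[ny][nx] >= threshold:
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            blobs.append((sx / n, sy / n, n))
    return blobs


def displacement(prev: List[Blob], curr: List[Blob]) -> List[Tuple[float, float]]:
    """Movement of each current blob relative to the nearest blob of the previous frame.

    Assumes `prev` is non-empty.
    """
    result = []
    for cx, cy, _ in curr:
        px, py, _ = min(prev, key=lambda b: (b[0] - cx) ** 2 + (b[1] - cy) ** 2)
        result.append((cx - px, cy - py))
    return result
```

In such a scheme, fixed tunnel lights would, for instance, show a characteristic movement pattern relative to the ego vehicle, while headlights and rear lights of other vehicles move differently; how those cues are combined is outside this sketch.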

The coprocessors are used as shown in Table 1:

Our target hardware architecture will use a Xilinx Virtex-II Pro FPGA with two embedded PowerPC (PPC) cores. PPC-1 will run the high-level application code, and PPC-2 will handle control and management functions. The configurable logic of the FPGA will be used for the coprocessors, the on-chip bus, data I/O, and the memory interface. On-chip Block RAM (BRAM) will serve as local memory for the coprocessors. Loading and replacement of the engines will be controlled by PPC-2.
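The control task of PPC-2 can be sketched as a small manager that maps the current driving scenario to an engine and swaps the engine only when the scenario calls for a different one. The scenario-to-engine mapping follows the driving scenario described above; the single reconfigurable region, the scenario labels, and the `load_bitstream` callback (standing in for the partial bitstream transfer, for example via the FPGA's internal configuration access port) are assumptions for illustration.

```python
from typing import Callable, List, Optional

# Mapping taken from the highway / tunnel scenario; the labels are hypothetical.
ENGINE_FOR_SCENARIO = {
    "highway": "ShapeEngine",
    "tunnel_entrance": "EdgeEngine",
    "inside_tunnel": "SegmentEngine",
}


class ReconfigurationManager:
    """Keeps one engine in a single reconfigurable region and swaps it on demand."""

    def __init__(self, load_bitstream: Callable[[str], None]) -> None:
        self.load_bitstream = load_bitstream  # hypothetical loader callback
        self.loaded: Optional[str] = None

    def on_scenario(self, scenario: str) -> str:
        engine = ENGINE_FOR_SCENARIO[scenario]
        if engine != self.loaded:
            # Reconfigure only when the scenario needs a different engine
            self.load_bitstream(engine)
            self.loaded = engine
        return engine


log: List[str] = []
manager = ReconfigurationManager(log.append)
for s in ["highway", "highway", "tunnel_entrance", "inside_tunnel", "inside_tunnel"]:
    manager.on_scenario(s)
print(log)  # prints ['ShapeEngine', 'EdgeEngine', 'SegmentEngine']
```

Skipping redundant loads matters because each partial reconfiguration takes time during which the engine is unavailable; a real controller would also have to start loading early enough that the new engine is ready when the scenario changes.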

Taillight Detection

Optical Flow

Acknowledgments

We especially want to thank Xilinx Research Labs for providing development boards for our research activities.

Dissertation

Dissertation of Christopher Claus:

"Zum Einsatz dynamisch rekonfigurierbarer eingebetteter Systeme in der Bildverarbeitung" (On the Use of Dynamically Reconfigurable Embedded Systems in Image Processing)
