Topics for the Projektpraktikum (Project Lab) Cognitive Systems

To discuss your interest in a topic or any other questions, please contact the advisors listed under each topic. Note that some topics are already taken, as indicated.

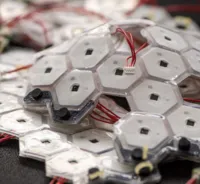

Reaching into the unknown with robot skin

Advisor: Simon Armleder, Constantin Uhde

The central question of this project is what information about the environment (e.g., objects and surfaces) can be reliably extracted from the robot skin mounted on a robotic arm.

The setup could, for example, include a camera that records the robot while it interacts with the environment, together with the tactile responses of the skin. At run time, the camera is removed, and we check what can be reconstructed from the skin alone.
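A minimal sketch of the camera-supervised idea, assuming a single proximity taxel whose (hypothetical) signal falls off with the inverse square of the distance: during training the camera supplies ground-truth distances and a calibration model is fitted; at run time the distance is estimated from the skin reading alone. The sensor model, the simulated data and all function names are illustrative assumptions, not the project's setup.

```python
import math
import random


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = a * x + b."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    a = sxy / sxx
    return a, mean_y - a * mean_x


def simulated_skin_reading(distance_m, rng):
    """HYPOTHETICAL proximity sensor: signal ~ 1 / d^2 plus Gaussian noise."""
    return 1.0 / distance_m ** 2 + rng.gauss(0.0, 0.01)


def skin_feature(reading):
    """Under the assumed model, 1 / sqrt(signal) is approximately linear in d."""
    return 1.0 / math.sqrt(max(reading, 1e-6))


rng = random.Random(0)

# Training phase: the camera provides ground-truth distances (simulated here).
camera_distances = [0.05 + 0.01 * i for i in range(20)]
readings = [simulated_skin_reading(d, rng) for d in camera_distances]
a, b = fit_line([skin_feature(r) for r in readings], camera_distances)


def estimate_distance(reading):
    """Run-time phase: estimate the distance from the skin reading only."""
    return a * skin_feature(reading) + b
```

Under this toy model, `estimate_distance(100.0)` returns a value close to 0.1 m. Real skin data would call for a richer model (several taxels, contact location, surface properties), but the train-with-camera, test-without-camera split stays the same.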

Requirements:

- Knowledge of the kinematics of robotic systems

- Knowledge of control

- Experience in C++ and Python

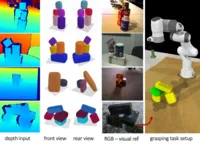

Shape detection in point clouds (for robotic applications) (already taken)

Advisor: Constantin Uhde

- State of the art

- Detecting unknown shapes

- Types of prior knowledge

- Shape representations
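For orientation, one classical baseline for detecting primitive shapes in point clouds is RANSAC. The sketch below fits a single plane to a synthetic cloud in pure Python; the data, the inlier threshold and the iteration count are hypothetical, and it is not meant to represent the state of the art the topic asks about.

```python
import random


def plane_from_points(p1, p2, p3):
    """Plane n . x + d = 0 through three points (unit normal), or None if collinear."""
    u = [p2[k] - p1[k] for k in range(3)]
    v = [p3[k] - p1[k] for k in range(3)]
    n = [u[1] * v[2] - u[2] * v[1],
         u[2] * v[0] - u[0] * v[2],
         u[0] * v[1] - u[1] * v[0]]
    norm = sum(c * c for c in n) ** 0.5
    if norm < 1e-12:
        return None
    n = [c / norm for c in n]
    d = -sum(n[k] * p1[k] for k in range(3))
    return n, d


def ransac_plane(points, iterations=200, threshold=0.01, seed=0):
    """Return the plane with the most inliers (distance < threshold) and those inliers."""
    rng = random.Random(seed)
    best_model, best_inliers = None, []
    for _ in range(iterations):
        model = plane_from_points(*rng.sample(points, 3))
        if model is None:
            continue  # degenerate (collinear) sample, try again
        n, d = model
        inliers = [p for p in points
                   if abs(sum(n[k] * p[k] for k in range(3)) + d) < threshold]
        if len(inliers) > len(best_inliers):
            best_model, best_inliers = model, inliers
    return best_model, best_inliers


# Synthetic scene: a 10 x 10 grid on the table plane z = 0 plus 20 points above it.
rng = random.Random(1)
table = [(0.1 * i, 0.1 * j, 0.0) for i in range(10) for j in range(10)]
clutter = [(rng.uniform(0.0, 1.0), rng.uniform(0.0, 1.0), rng.uniform(0.2, 1.0))
           for _ in range(20)]
(normal, offset), inliers = ransac_plane(table + clutter)
```

The recovered plane is the table (normal along z), and its 100 inliers are the table points. Detecting unknown shapes would need more expressive models than a fixed primitive, which is where prior knowledge and shape representations come in.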

References:

[1] Landgraf, Zoe, et al. "SIMstack: A Generative Shape and Instance Model for Unordered Object Stacks." arXiv preprint arXiv:2103.16442 (2021).

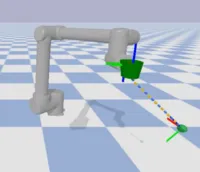

Modeling of high-acceleration tasks in PyBullet

Advisor: Simon Armleder

The goal of this project is to model a high-acceleration task (e.g., juggling, a pancake flip, …) in the physics simulator PyBullet. A policy search method (e.g., PoWER, PI², eREPS) can then be used to let the robot learn that skill.
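To illustrate the kind of update such methods perform, here is a simplified, episodic variant of PoWER: the parameters are perturbed with Gaussian noise, and the perturbations of the best rollouts are averaged with their (positive) returns as weights. A toy reward stands in for a PyBullet rollout; the target parameters, the noise schedule and the function names are assumptions, and the reuse of rollouts from past iterations in the original method is omitted.

```python
import math
import random

TARGET = [0.7, -0.3]  # hypothetical optimal parameters of the toy task


def rollout_return(theta):
    """Toy stand-in for a simulated episode; positive and maximal at TARGET."""
    cost = sum((t - g) ** 2 for t, g in zip(theta, TARGET))
    return math.exp(-5.0 * cost)


def power_search(theta, iterations=100, rollouts=20, best_k=5,
                 sigma=0.2, decay=0.97, seed=0):
    """Simplified episodic PoWER: return-weighted average of the best perturbations."""
    rng = random.Random(seed)
    theta = list(theta)
    for _ in range(iterations):
        samples = []
        for _ in range(rollouts):
            eps = [rng.gauss(0.0, sigma) for _ in theta]
            ret = rollout_return([t + e for t, e in zip(theta, eps)])
            samples.append((ret, eps))
        # Keep only the best rollouts of this iteration (past iterations are ignored).
        samples.sort(key=lambda s: s[0], reverse=True)
        best = samples[:best_k]
        total = sum(ret for ret, _ in best)
        theta = [t + sum(ret * eps[j] for ret, eps in best) / total
                 for j, t in enumerate(theta)]
        sigma *= decay  # shrink exploration as the policy improves
    return theta
```

Starting from `power_search([0.0, 0.0])`, the parameters end up close to `TARGET`. In the project, `rollout_return` would execute the parameterized motion in PyBullet and score it, which is also where the high accelerations make the simulation model critical.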

Requirements:

- Knowledge of Python

- Knowledge of robotics

Reducing impact forces with robot skin

Advisor: Simon Armleder, Julio Rogelio Guadarrama Olvera

The goal of this project is to explore how the robot skin can be used to reduce impact forces during collisions. The skin has integrated proximity sensors that can provide information about a collision before it happens. A robot could use this information to reduce the relative velocity between its link and the approaching object and thereby prevent damage.

This is an advantage over standard robots with embedded joint torque sensors, which can only react after a collision has already happened.
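A minimal sketch of how the proximity information could be used, assuming the skin provides the distance to the approaching object and an estimate of the object's speed along the link-object direction: the closing speed is kept below a bound that shrinks with distance, so the link slows down, or even retreats, before contact. The linear bound and its parameters are hypothetical and only illustrate the idea.

```python
def limit_link_velocity(v_nominal, v_object, distance,
                        v_contact_max=0.05, k=2.0):
    """Limit the link velocity so the closing speed stays below a distance bound.

    All quantities are along the line from the link to the object:
      v_nominal  link velocity toward the object requested by the task [m/s]
      v_object   object velocity toward the link, e.g. from successive
                 proximity readings [m/s]
      distance   link-object distance measured by the skin [m]
    HYPOTHETICAL bound: allowed closing speed = v_contact_max + k * distance.
    A negative result means the link should retreat from the object.
    """
    v_allowed = v_contact_max + k * max(distance, 0.0)
    return min(v_nominal, v_allowed - v_object)


# Far away: the task command passes unchanged.
far = limit_link_velocity(0.3, 0.0, 1.0)
# Object approaching fast at 5 cm: the link backs off to reduce the impact speed.
near = limit_link_velocity(0.3, 0.4, 0.05)
```

In a full controller, this scalar limit would be mapped to joint velocities through the Jacobian of the skin patch that detected the object.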

Requirements:

- Knowledge of the kinematics and dynamics of robotic systems

- Knowledge of control

- Experience in C++

Property detection in objects (using point clouds) (already taken)

Advisor: Constantin Uhde

- State of the art

- Semantic Segmentation

- Detecting properties of unknown objects

- Property representations

- Types of prior knowledge

References:

[1] Schoeler, Markus and Florentin Wörgötter. “Bootstrapping the Semantics of Tools: Affordance Analysis of Real World Objects on a Per-part Basis.” IEEE Transactions on Cognitive and Developmental Systems 8 (2016): 84-98.

Upper-limb exoskeleton simulation in PyBullet (already taken)

Advisor: Natalia Paredes Acuña

Description: Simulating human-robot interaction offers numerous advantages, including insight into system performance and minimizing the risks involved in physical interaction between people and robots. Assistive Gym [1] is a physics simulation framework for assistive robotics in which robotic manipulators assist a person with activities of daily living (ADL) in different environments. It is built on PyBullet, the Python module of the Bullet Physics SDK, which provides real-time collision detection and multi-physics simulation for VR, games, visual effects, robotics, machine learning, etc. [2]. This project aims to implement a simulation environment based on the Assistive Gym framework in which the robot manipulator is a wearable upper-limb exoskeleton.

References:

[1] Zackory Erickson, Vamsee Gangaram, Ariel Kapusta, C. Karen Liu, and Charles C. Kemp, “Assistive Gym: A Physics Simulation Framework for Assistive Robotics”, IEEE International Conference on Robotics and Automation (ICRA), 2020. www.youtube.com/watch

[2] Erwin Coumans and Yunfei Bai. PyBullet, a Python module for physics simulation for games, robotics and machine learning. 2016-2020. pybullet.org

LIPM walking controller for the REEM-C robot in the mc_rtc control framework

Advisor: Julio Rogelio Guadarrama Olvera

In previous projects, we successfully integrated the REEM-C robot into the mc_rtc framework [1,2]. The aim now is to make it walk using the LIPM (linear inverted pendulum model) walking controller implemented by Caron et al. [3]. This project will provide locomotion functionality for implementing new behaviors in the mc_rtc framework. It will also help test the REEM-C integration in mc_rtc and provide an alternative walking controller for evaluating the performance of future implementations on our humanoid robot, H1.
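For reference, the LIPM keeps the center of mass (CoM) at a constant height h, which gives the dynamics ẍ = ω²(x − p) with ω = √(g/h), where p is the zero-moment point (ZMP). The divergent component of motion (DCM) ξ = x + ẋ/ω then satisfies ξ̇ = ω(ξ − p), which is why many LIPM-based walking stabilizers regulate the DCM through the ZMP. The sketch below simulates the 1D model with a fixed ZMP and compares the simulated DCM with the closed-form solution; the CoM height and initial state are hypothetical, and this is not code from Caron et al.'s controller.

```python
import math

G = 9.81                   # gravity [m/s^2]
COM_HEIGHT = 0.8           # hypothetical constant CoM height [m]
OMEGA = math.sqrt(G / COM_HEIGHT)


def simulate_lipm(x0, xd0, zmp, dt=1e-4, duration=0.5):
    """Integrate x'' = omega^2 (x - p) with a fixed ZMP p (semi-implicit Euler)."""
    x, xd = x0, xd0
    for _ in range(round(duration / dt)):
        xd += OMEGA ** 2 * (x - zmp) * dt
        x += xd * dt
    return x, xd


def dcm(x, xd):
    """Divergent component of motion: xi = x + xd / omega."""
    return x + xd / OMEGA


x0, xd0, zmp = 0.02, 0.1, 0.0
x_end, xd_end = simulate_lipm(x0, xd0, zmp)
# Closed form for a fixed ZMP: xi(t) = p + (xi(0) - p) * exp(omega * t).
xi_closed_form = zmp + (dcm(x0, xd0) - zmp) * math.exp(OMEGA * 0.5)
```

The simulated and closed-form DCM agree up to integration error. The exponential divergence of ξ away from p is what a walking stabilizer counteracts by shifting the ZMP.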

References:

[1] Kheddar, A., Caron, S., Gergondet, P., Comport, A., Tanguy, A., Ott, C., ... & Wieber, P. B. (2019). Humanoid robots in aircraft manufacturing: The airbus use cases. IEEE Robotics & Automation Magazine, 26(4), 30-45.

[2] https://jrl-umi3218.github.io/mc_rtc/index.html

[3] Caron, S., Kheddar, A., & Tempier, O. (2019, May). Stair climbing stabilization of the HRP-4 humanoid robot using whole-body admittance control. In 2019 International Conference on Robotics and Automation (ICRA) (pp. 277-283). IEEE.

Smart planning of pick-and-place tasks for the WRS “Tidy up there” challenge

Advisor: Julio Rogelio Guadarrama Olvera

Abstract: The “Tidy up there” challenge of the World Robot Summit competition requires a robot to move objects from random places on the floor and in one area of a room to specific locations and orientations determined by their object class. The challenge requires fast and precise pick-and-place manipulation as well as indoor navigation. There are two objectives: 1) pick and place as many objects as possible within 15 minutes, and 2) maximize the score, where each object is worth a different number of points depending on how difficult it is to pick and place. Any improvement toward these goals therefore increases the chance of winning the competition. The proposed topic is to develop a high-level planner that decides the order in which the objects are tidied so as to reduce transit and waiting times. The planner can also use strategies such as carrying objects on a tray or picking two objects at once to improve the results.
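To make the planning problem concrete, the sketch below treats a tiny instance as a search over object orders. Each object has a hypothetical score, pick position and drop-off position; the robot moves at a constant (hypothetical) speed and needs a fixed time per pick and place; the planner chooses the order that maximizes the score collected within the 15-minute limit, breaking ties by shorter elapsed time. Exhaustive search only works for a handful of objects, and trays or double picks are not modeled.

```python
import itertools
import math

TIME_LIMIT_S = 15 * 60   # 15-minute limit from the task description
SPEED_M_S = 0.3          # hypothetical average navigation speed [m/s]
HANDLING_S = 220.0       # hypothetical time per pick and place [s]

# Hypothetical objects: name -> (score, pick position, drop-off position) [m]
OBJECTS = {
    "cup": (10, (1.0, 2.0), (4.0, 0.0)),
    "book": (20, (3.0, 3.0), (0.0, 4.0)),
    "toy": (5, (2.0, 0.5), (4.0, 0.5)),
    "shoe": (15, (0.5, 3.5), (0.0, 0.0)),
}


def travel_time(a, b):
    return math.dist(a, b) / SPEED_M_S


def evaluate(order, start=(0.0, 0.0)):
    """Score and elapsed time when tidying in this order until time runs out."""
    pos, elapsed, score = start, 0.0, 0
    for name in order:
        value, pick, place = OBJECTS[name]
        step = travel_time(pos, pick) + travel_time(pick, place) + HANDLING_S
        if elapsed + step > TIME_LIMIT_S:
            break  # the next object no longer fits into the time limit
        elapsed += step
        score += value
        pos = place
    return score, elapsed


def rank(order):
    """Prefer a higher score, then a shorter elapsed time."""
    score, elapsed = evaluate(order)
    return score, -elapsed


def best_order(names):
    """Exhaustive search over all orders; only feasible for a few objects."""
    return max(itertools.permutations(names), key=rank)
```

With these numbers, all four objects cannot be tidied within 15 minutes, so `best_order(list(OBJECTS))` returns an order that handles the book, shoe and cup first, for a score of 45. A competition planner would replace the exhaustive search with a heuristic or optimization method and add strategies such as the tray as extra actions.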

Aptitudes: Good programming skills in C++ or Python and good coding standards

References:

- Kang, M., Kwon, Y., & Yoon, S. E. (2018, June). Automated task planning using object arrangement optimization. In 2018 15th International Conference on Ubiquitous Robots (UR) (pp. 334-341). IEEE.

- Rahman Dabbour, A., Erdem, E., & Patoglu, V. (2019). Object Placement on Cluttered Surfaces: A Nested Local Search Approach. arXiv preprint arXiv:1906.08494.

REEM-C robot in the mc_rtc control framework

Advisor: Julio Rogelio Guadarrama Olvera

Abstract: The mc_rtc control framework is a software stack for rapidly implementing whole-body controllers on humanoid robots and mobile platforms. It defines tasks as objectives of a quadratic program (QP) and solves this QP to execute a stack of tasks with either a strict or a flexible hierarchy. The REEM-C robot is a full-size humanoid that runs on ros_control, a framework that provides a hardware abstraction layer for implementing controllers. This topic consists of implementing a bridge between ros_control and mc_rtc for the REEM-C robot.
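As a generic sketch of the kind of QP such whole-body frameworks solve (an illustrative assumption, not mc_rtc's exact formulation), a flexible hierarchy can be expressed by weighting each task error in the cost. Here J_i is the Jacobian of task i, ẍ_i* its desired task acceleration, w_i > 0 its weight, M the joint-space mass matrix, h the Coriolis, centrifugal and gravity terms, S the actuation selection matrix, J_c the contact Jacobian, f the contact forces and 𝓕 a (linearized) friction cone. A strict hierarchy can instead be obtained, for example, by moving higher-priority tasks into the constraints.

```latex
\begin{aligned}
\min_{\ddot{q},\,\tau,\,f}\quad & \sum_{i} w_i \,\bigl\lVert J_i(q)\,\ddot{q} + \dot{J}_i(q,\dot{q})\,\dot{q} - \ddot{x}_i^{\ast} \bigr\rVert^2 \\
\text{s.t.}\quad & M(q)\,\ddot{q} + h(q,\dot{q}) = S^{\top}\tau + J_c(q)^{\top} f, \\
& \tau_{\min} \le \tau \le \tau_{\max}, \qquad f \in \mathcal{F}.
\end{aligned}
```

The bridge in this topic sits below this layer: it forwards the measured joint state from ros_control to the QP controller and sends the resulting commands back through the hardware abstraction layer.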

Aptitudes: Strong programming skills in C++ and good coding standards

References:

- Kheddar, A., Caron, S., Gergondet, P., Comport, A., Tanguy, A., Ott, C., ... & Wieber, P. B. (2019). Humanoid robots in aircraft manufacturing: The airbus use cases. IEEE Robotics & Automation Magazine, 26(4), 30-45.

- https://jrl-umi3218.github.io/mc_rtc/index.html

- http://wiki.ros.org/ros_control

- http://wiki.ros.org/Robots/REEM-C/Tutorials