Research Agenda at the ATARI Lab

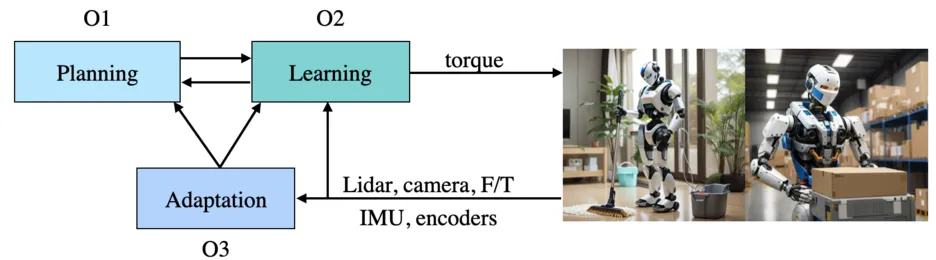

The Applied and Theoretical Aspects of Robot Intelligence (ATARI) Lab envisions a future where humanoid robots autonomously perform complex tasks in dynamic and unstructured environments. Consider a scenario where a robot tidies a cluttered house each day, encountering different configurations and challenges. This vision requires the robot to:

- Plan sequences of actions (O1) in novel situations.

- Leverage learning to offload computation and continually improve behaviours (O2) while accounting for safety.

- Adapt its behaviour while interacting with diverse objects and surfaces (O3) reliably and effectively.

To date, no existing framework offers the generality, adaptability, and robustness required for such tasks. At ATARI, we develop foundational principles and frameworks to endow robots with these capabilities. Using techniques ranging from model-based control and trajectory optimization to imitation learning and reinforcement learning, we aim to harness the strengths of both model-based and data-driven paradigms to advance the frontiers of robotics.

To find videos of our research, check out our YouTube Channel.

Planning (O1)

Planning in Novel Situations

Effective planning in unstructured environments involves addressing vast, high-dimensional state spaces and generating feasible action sequences in real time. ATARI Lab investigates methods to efficiently plan in large state spaces and to create algorithms that scale across diverse scenarios.

Discovering the Right Abstractions

The power of abstraction lies in its ability to represent complex systems concisely. In vision, convolutional filters revolutionized learning by embedding spatial hierarchies, while in language, transformers demonstrated the utility of attention mechanisms. In robotics, we hypothesize that contacts with the environment are one of the key abstractions for planning and control in loco-manipulation. Developing algorithms that leverage this abstraction (and others) will enable robots to reason about physical interactions more effectively.

Decomposing Complex, Long-Horizon Tasks

Tasks such as cleaning or assembling objects involve multiple interdependent subtasks, each requiring precise execution and adaptability. For instance, cleaning a house requires moving furniture, vacuuming, and emptying dishwashers. ATARI’s research focuses on hierarchical task decomposition, encoding this structure in a holistic planning and control framework.

Breaking Free from Rigid Contact Models

Many state-of-the-art robotic models rely on rigid contact assumptions, limiting their applicability in dynamic and deformable environments. While reinforcement learning benefits from domain randomization for robustness, the underlying simulations remain grounded in rigid models. ATARI is exploring hybrid formulations that integrate soft-contact dynamics and probabilistic representations, offering a more nuanced approach to modeling and planning in real-world scenarios.
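To make the rigid versus soft distinction concrete, here is a minimal sketch of a generic spring-damper (penalty) contact model for a point mass dropped onto flat ground. It is an illustration only, not the formulation under study at ATARI; the function names and all numerical values (stiffness, damping, time step) are assumptions chosen for the example.

```python
MASS, GRAVITY = 1.0, 9.81          # kg, m/s^2
STIFFNESS, DAMPING = 1.0e4, 200.0  # N/m, N*s/m (hypothetical values)
DT = 1.0e-4                        # s


def soft_contact_force(height, velocity):
    """Normal force from a spring-damper ground located at height 0.

    Zero above the surface. Below it, the force grows with penetration
    depth, is damped by the velocity, and is clipped at zero so the
    ground can only push, never pull.
    """
    if height >= 0.0:
        return 0.0
    penetration = -height
    return max(0.0, STIFFNESS * penetration - DAMPING * velocity)


def drop(height=0.1, duration=2.0):
    """Drop a point mass from rest and integrate until `duration` seconds."""
    velocity, max_penetration = 0.0, 0.0
    for _ in range(int(duration / DT)):
        force = soft_contact_force(height, velocity) - MASS * GRAVITY
        velocity += force / MASS * DT  # semi-implicit Euler: velocity first
        height += velocity * DT
        max_penetration = max(max_penetration, -height)
    return height, velocity, max_penetration


final_height, final_velocity, deepest = drop()
print(f"rest height: {final_height:.5f} m, deepest penetration: {deepest:.5f} m")
```

An idealized rigid model would stop the mass exactly at height 0. The soft model instead lets it sink slightly until the spring force balances gravity, and the stiffness and damping become parameters that a planner or learner has to account for.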

Optimal Control and Deep Reinforcement Learning

State-of-the-art optimal control and (deep) reinforcement learning in physics simulation each have distinct strengths: optimal control leverages the gradients of the simulation to plan efficiently, while deep reinforcement learning samples in simulation to estimate gradients and excels in robustness. However, neither fully satisfies the needs of general-purpose robots. At ATARI, we investigate hybrid approaches that leverage the strengths of both.
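The contrast between using simulation gradients and estimating them from samples can be illustrated on a toy problem. The sketch below, under assumed toy dynamics (a unit point mass pushed by a constant force `u`), computes the exact gradient of a reaching cost by propagating sensitivities through the rollout, and compares it with a zeroth-order random-perturbation estimate. The perturbation estimator is a simple stand-in for sampling-based methods and is not equivalent to the policy-gradient algorithms used in deep reinforcement learning; all names and constants are hypothetical.

```python
import random

DT, STEPS, GOAL = 0.1, 20, 1.0  # hypothetical toy values


def rollout(u):
    """Simulate a unit point mass pushed by a constant force u.

    Returns the final-position cost and its exact derivative w.r.t. u,
    obtained by propagating the sensitivities dx/du and dv/du alongside
    the state (forward-mode differentiation of the simulator).
    """
    x = v = 0.0
    dx = dv = 0.0
    for _ in range(STEPS):
        v += u * DT
        dv += DT
        x += v * DT
        dx += dv * DT
    cost = (x - GOAL) ** 2
    grad = 2.0 * (x - GOAL) * dx
    return cost, grad


def sampled_gradient(u, sigma=0.1, n_samples=2000, seed=0):
    """Estimate d(cost)/du from cost evaluations only.

    Uses antithetic Gaussian perturbations of u; no simulator
    derivatives are needed, at the price of many rollouts and noise.
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_samples):
        eps = rng.gauss(0.0, 1.0)
        cost_plus, _ = rollout(u + sigma * eps)
        cost_minus, _ = rollout(u - sigma * eps)
        total += (cost_plus - cost_minus) / (2.0 * sigma) * eps
    return total / n_samples


_, exact = rollout(0.0)
estimate = sampled_gradient(0.0)
print(f"exact gradient:   {exact:.3f}")
print(f"sampled gradient: {estimate:.3f}")
```

The exact gradient costs one rollout but requires a differentiable simulator. The sampled estimate needs thousands of rollouts, yet it only ever evaluates the cost, so it also applies when the simulation is non-smooth, as is typical with contact.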

Learning (O2)

The Role of Inductive Biases

Inductive biases shape how learning algorithms prioritize exploration and prune infeasible solutions, reducing sample complexity and facilitating out-of-distribution (OOD) generalization. In robotics, biases rooted in physics, geometry, or contact dynamics can accelerate learning while maintaining generality. ATARI explores the design and formalization of task-agnostic inductive biases that facilitate efficient learning without over-constraining exploration.

Training Generalizable Policies

Robot policies must generalize across diverse embodiments and tasks. In imitation learning, a major bottleneck is the lack of large-scale, high-quality data akin to internet-scale datasets for language models. For reinforcement learning, challenges include defining generic goal representations and reward structures that accommodate diverse contact-rich tasks. At ATARI, we develop methods to learn from limited data.

Representation Learning for Robotics

Compact, expressive representations of robotic data are essential for efficient learning and control. While CNNs revolutionized computer vision and transformers have become a standard for sequential data, the optimal representation architecture for robotics remains unclear. We aim to identify such representations in order to develop versatile and efficient policies.

Learning Safely in the Real World

Learning directly in the real world remains a formidable challenge due to the high sample complexity of state-of-the-art algorithms, coupled with safety considerations. We explore strategies for safe and efficient real-world learning, aiming to reduce sample complexity while ensuring safety.

Adaptation (O3)

Handling Uncertainty in Real-World Environments

Simulators fail to capture the full spectrum of uncertainties robots face, from material properties to dynamic obstacles. At ATARI, we focus on enabling robots to detect out-of-distribution scenarios and respond reliably through robust policy adaptation and uncertainty-aware decision-making.

Developing Adaptive and Robust Policies

Designing robust and adaptive policies for hybrid dynamical systems is notoriously difficult. Current reinforcement learning techniques, for their part, rely heavily on domain randomization to handle variability, but this approach results in unnecessarily conservative policies. Instead, we are investigating adaptive policies that adjust dynamically to new environments. Techniques like adaptive control, meta-reinforcement learning, and contextual policy optimization offer promising pathways for equipping robots with the ability to learn and adapt in real time.

Our Vision

ATARI Lab is committed to advancing robotics research to create robots capable of autonomous loco-manipulation, adaptive planning, and seamless learning in unstructured environments. By addressing the fundamental challenges of planning, learning, and adaptation, we aim to develop the foundational frameworks for a future where humanoid robots are reliable collaborators in dynamic, human-centric settings.