Psychoacoustics

How does our ear hear sound? Can we describe our perception of sound with computational models? Psychoacoustics establishes the link between the physical world (acoustics) and how we perceive the sound arriving at the ear (perception). Psychoacoustic principles are used, for example, in MP3 audio coding, which leaves out those parts of the audio signal that are deemed inaudible because they are masked by other parts. Auditory masking is one of our research interests in psychoacoustics (e.g., Pierzycki and Seeber, 2014; Seeber, 2008).

Our key interest is in understanding the amazing ability of the auditory system to analyze the auditory scene. How can we hear out a particular sound from a potpourri of sounds, for example, our conversation partner in a noisy pub? What is the role of monaural and binaural information in that process, both in the normal and the impaired auditory system? Current research topics are the use of temporal fine structure vs. envelope information, sound localization in noisy spaces, the spectral and temporal weighting of binaural cues, auditory grouping, and the auditory processes that deal with reverberation. Localizing sound sources in reverberant and noisy spaces is an essential ability for human beings. You don’t need to think as far as evading a predator in a forest (or a car in the urban jungle): locating your alarm clock in the morning is made tricky enough by the room’s reverberation.

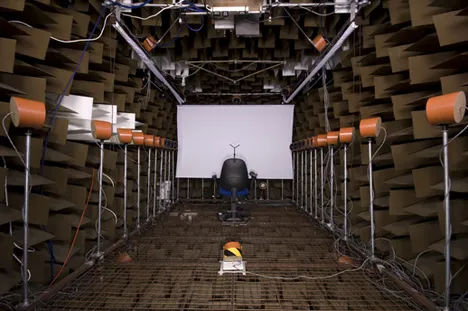

Sound reflects off walls and objects in a room, and these reflections interfere with the sound arriving directly from the source, making it difficult to extract information about the source’s direction. Nevertheless, we can usually locate the source correctly. This is attributed to the precedence effect: the auditory system gives most weight to the first-arriving wavefront, so early reflections have little impact on localization. We study these aspects in the virtual acoustic space of our Simulated Open Field Environment.
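The interference between direct sound and a reflection, and why the first wavefront is so informative, can be illustrated with a toy simulation. The sketch below is not the group's method and not a model of the auditory system. It assumes a noise burst as the source, a single reflection, and interaural time differences (ITDs) expressed as whole-sample delays. All names and parameter values (`ear_signals`, `cross_correlation`, the 20- and -25-sample ITDs, the 0.8 reflection gain) are hypothetical choices made for illustration.

```python
import numpy as np


def ear_signals(source, paths, total_len):
    """Sum delayed, scaled copies of `source` at the left and right ear.

    Each path is (delay, itd, gain), with delay and itd in samples.
    itd > 0 means the sound reaches the left ear first and the right
    ear `itd` samples later; itd < 0 means the opposite.
    """
    left = np.zeros(total_len)
    right = np.zeros(total_len)
    for delay, itd, gain in paths:
        start_left = delay + max(-itd, 0)
        start_right = delay + max(itd, 0)
        left[start_left:start_left + len(source)] += gain * source
        right[start_right:start_right + len(source)] += gain * source
    return left, right


def cross_correlation(left, right, max_lag):
    """Interaural cross-correlation for lags in [-max_lag, max_lag].

    A peak at lag k means the right-ear signal lags the left by k samples.
    """
    lags = np.arange(-max_lag, max_lag + 1)
    core = left[max_lag:len(left) - max_lag]
    corr = np.array([
        np.dot(core, right[max_lag + lag:len(right) - max_lag + lag])
        for lag in lags
    ])
    return lags, corr


# Assumed toy scene: a direct sound from the left and one reflection
# from the right that arrives 240 samples later at 0.8 times the amplitude.
DIRECT_ITD, REFLECTION_ITD = 20, -25
MAX_LAG = 40

rng = np.random.default_rng(0)
source = rng.standard_normal(4800)  # noise burst
paths = [(0, DIRECT_ITD, 1.0), (240, REFLECTION_ITD, 0.8)]
left, right = ear_signals(source, paths, total_len=5200)

# Whole signal: direct sound and reflection overlap almost everywhere.
lags, corr_full = cross_correlation(left, right, MAX_LAG)
# Onset only: the first 200 samples end before the reflection arrives.
_, corr_onset = cross_correlation(left[:200], right[:200], MAX_LAG)


def relative_peak(corr, lag):
    """Correlation at `lag`, relative to the largest value in `corr`."""
    return corr[lag + MAX_LAG] / corr.max()


itd_onset = int(lags[np.argmax(corr_onset)])
print("ITD estimated from the onset window:", itd_onset)
print("Relative correlation at the reflection's ITD, whole signal:",
      relative_peak(corr_full, REFLECTION_ITD))
print("Relative correlation at the reflection's ITD, onset window:",
      relative_peak(corr_onset, REFLECTION_ITD))
```

In this toy setup the cross-correlation of the whole signal contains a second, competing peak at the reflection's ITD, whereas the short onset window carries only the ITD of the direct sound. Weighting the onset is one simple way to see why the first wavefront can dominate localization; the actual mechanisms behind the precedence effect are a matter of research.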

Learning the reflection characteristics of rooms

Have you ever observed that after a while in a room you stop noticing its reverberation? Reverberation becomes less noticeable once we have been exposed to a room’s sound reflections for some time. The auditory system seems to adapt to the reflections [1], which can improve speech understanding [2]. We ask whether, by learning the room’s reflection pattern, the auditory system can improve sound localization in that room as well. Our preliminary results suggest that localization accuracy is indeed slightly better after prior exposure to the room reflections.

We further ask whether the auditory system adapts to the specific pattern of reflections or whether room learning occurs at a higher level. Can the auditory system generate and use an abstract representation of the room geometry to improve localization and speech understanding? Testing this hypothesis is tricky, so we are conducting a range of experiments to address it.

In cooperation with Prof. Martin Kleinsteuber, we use numerical modeling to investigate possible mechanisms for extracting and learning room geometries from the information carried in the sound. We are working on a model that learns room properties from monaural and binaural signals. Our approach rests on the assumption that the reflection pattern contains structure that can be exploited [3].

[1]: Clifton and Freyman, Perception & Psychophysics 1989.

[2]: Brandewie and Zahorik, J. Acoust. Soc. Am. 2010.

[3]: Kleinsteuber and Shen, IEEE Signal Proc. Lett. 2012.

Funding

2013-2015: Bernstein Center for Computational Neuroscience Munich, Transfer project T-2 (BMBF grant 01 GQ 1004A).

Staff

Current:

Lubos Hladek

Past:

Dr. Fritz Menzer

Selected Publications

Menzer, F., and Seeber, B.U.: Perception of scrambled reflections. Fortschritte der Akustik – DAGA '15, Dt. Ges. f. Akustik e.V. (DEGA), 1466–1469, 2015.

Menzer, F., and Seeber, B.U.: Does reverberation perception differ in virtual spaces with unrealistic sound reflections? Proc. Forum Acusticum, Krakow, Europ. Acoustics Assoc., 2014.